Free Program Satisfaction Survey

50+ Expert-Crafted Program Satisfaction Survey Questions

Measuring Program Satisfaction lets you uncover participants' true experiences, helping you boost engagement, retention, and outcomes. A Program Satisfaction survey gathers structured feedback on what resonates with your audience and highlights opportunities for improvement. Download our free template preloaded with proven questions - or visit our form builder to design a custom survey that fits your unique needs.

Top Secrets for a Game-Changing Program Satisfaction Survey

Launching a successful Program Satisfaction survey starts with clear purpose. You need to know why you're gathering feedback: to improve content, refine delivery, or boost engagement. When you pinpoint the goal, you'll craft questions that truly matter to your audience.

Feedback drives decisions. Organizations that tie responses to action plans see higher buy-in and retention. Teams that run satisfaction polls regularly also tend to make better-targeted program tweaks, because steady response rates give them more reliable insights.

Start by setting objectives and defining your target group carefully. A clear objective sets the tone - outline what you want to learn and who should answer each item. Check this Rutgers University guide for a step-by-step on defining goals and choosing data methods.

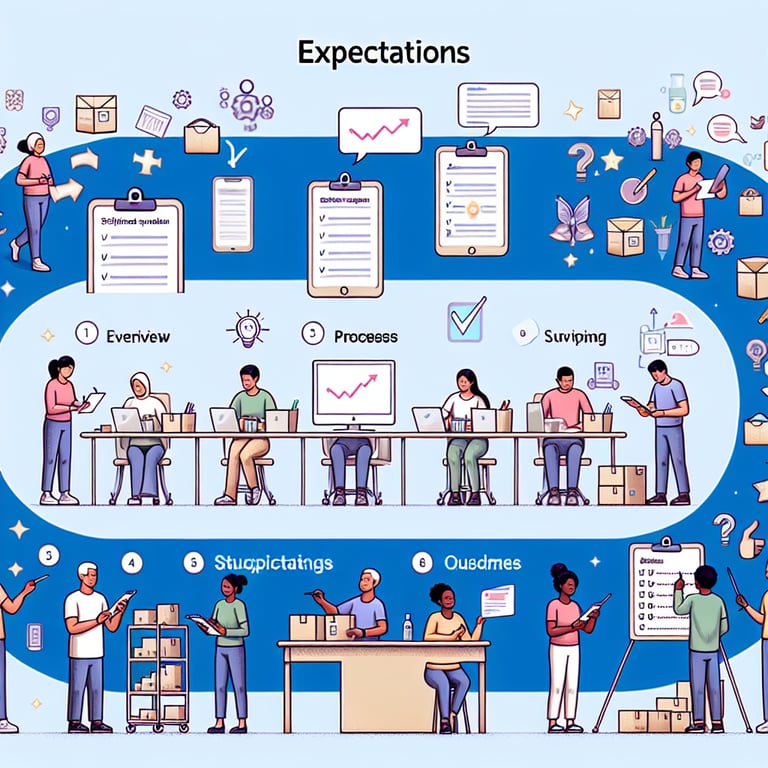

Then, structure questions to flow from broad to focused. Use plain language and avoid double-barreled items. James Madison University's survey primer offers tips on phrasing and pyramid logic. Don't forget to review Program Feedback Survey Questions for inspiration.

Pick the right mix of closed-ended and open-ended questions. Likert scales let you quantify satisfaction, while a few narrative prompts enrich context. This balance yields robust data, and keeping the survey under ten minutes supports higher completion rates (Design Basics: Part 3).
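If you export responses for analysis, a Likert question can be summarized in a few lines. The sketch below is only an illustration: the five labels, their 1-5 scores, and the `summarize_likert` helper are assumptions, not part of any specific survey tool.

```python
from collections import Counter
from statistics import mean

# Hypothetical 5-point satisfaction scale; adjust labels and scores to your survey.
LIKERT_SCORES = {
    "Very dissatisfied": 1,
    "Dissatisfied": 2,
    "Neutral": 3,
    "Satisfied": 4,
    "Very satisfied": 5,
}


def summarize_likert(responses):
    """Return the count, mean score, top-two-box share, and label tally for one question."""
    # Ignore blanks or labels that are not on the scale.
    valid = [r for r in responses if r in LIKERT_SCORES]
    if not valid:
        return {"n": 0, "mean": None, "top_two_box": None, "counts": {}}
    scores = [LIKERT_SCORES[r] for r in valid]
    # Top-two-box: share of respondents who chose one of the two highest options.
    top_two = sum(1 for s in scores if s >= 4) / len(scores)
    return {
        "n": len(scores),
        "mean": round(mean(scores), 2),
        "top_two_box": round(top_two, 2),
        "counts": dict(Counter(valid)),
    }


sample = ["Satisfied", "Very satisfied", "Neutral", "Satisfied", "Dissatisfied"]
summary = summarize_likert(sample)
print(summary)
```

For the five sample answers, the mean score is 3.6 and the top-two-box share is 0.6. Reporting both is a design choice: the mean tracks change over time, while the top-two-box share is easier to explain to stakeholders.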

Before full launch, run a small pilot. Share it with a trusted subgroup and note any confusing language or skipped items. This step sharpens questions and reduces survey fatigue. It ensures you're ready to go live with confidence.
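To spot skipped items in a pilot, you could tally how often each question is left blank. This is a minimal sketch that assumes each pilot response is a dictionary keyed by question ID. The IDs, the `skip_rates` and `flag_questions` helpers, and the 25% threshold are all hypothetical.

```python
def skip_rates(pilot_responses, question_ids):
    """Share of pilot respondents who left each question blank or unanswered."""
    total = len(pilot_responses)
    rates = {}
    for qid in question_ids:
        # A missing key, None, or an empty string all count as a skip.
        skipped = sum(1 for r in pilot_responses if r.get(qid) in (None, ""))
        rates[qid] = skipped / total if total else 0.0
    return rates


def flag_questions(rates, threshold=0.25):
    """Question IDs whose skip rate meets or exceeds the (assumed) threshold."""
    return sorted(q for q, rate in rates.items() if rate >= threshold)


# Made-up pilot data for illustration only.
pilot = [
    {"q1": 4, "q2": "", "q3": "More labs"},
    {"q1": 5, "q2": 3, "q3": ""},
    {"q1": 3, "q2": None, "q3": ""},
    {"q1": 4, "q2": 4},
]
rates = skip_rates(pilot, ["q1", "q2", "q3"])
flagged = flag_questions(rates)
print(rates, flagged)
```

In this made-up pilot, `q2` and `q3` are flagged, so those are the items to reword or reposition before the full launch.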

Imagine you run a community coding bootcamp and want quick wins. A simple poll shared at session's end can deliver instant insights. Ask "What aspects of the program did you find most valuable?" or "How likely are you to recommend this program to a peer?" and watch patterns emerge.
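One common way to score a "how likely are you to recommend" question is a Net Promoter Score. The sketch below assumes you ask it on a 0-10 scale, which the example above does not specify. The `net_promoter_score` helper and the sample ratings are illustrative only.

```python
def net_promoter_score(ratings):
    """Percent of promoters (9-10) minus percent of detractors (0-6), as a whole number."""
    # Keep only ratings that fall on the assumed 0-10 scale.
    valid = [r for r in ratings if 0 <= r <= 10]
    if not valid:
        return None
    promoters = sum(1 for r in valid if r >= 9)
    detractors = sum(1 for r in valid if r <= 6)
    return round(100 * (promoters - detractors) / len(valid))


# Made-up end-of-session poll results.
ratings = [10, 9, 8, 7, 6, 9, 3, 10]
print(net_promoter_score(ratings))
```

Here four of eight respondents are promoters and two are detractors, giving a score of 25. Passives (7-8) count toward the total but toward neither group.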

Once you gather answers, analyze themes and prioritize action items. Share results with stakeholders and repeat regularly. By treating your survey as a living tool, you'll refine experiences and boost satisfaction over time.

5 Must-Know Pitfalls to Dodge in Your Program Satisfaction Survey

Even the most thoughtful Program Satisfaction survey can stumble if you overlook common missteps. Small oversights compound into low completion rates, skewed results, and disappointed stakeholders. By spotting pitfalls early - like vague objectives or clumsy wording - you'll protect data integrity. Ready to sharpen your survey?

Skipping a clear objective tops the list of blunders. Without a defined target, you'll field irrelevant feedback that clouds decision-making. Draft concise goals before listing any questions and revisit them throughout design. This keeps your survey on track from first draft to final results.

Watch your phrasing for bias and complexity. Double-barreled or leading questions push respondents toward certain answers and weaken reliability. The SERVQUAL model underscores the importance of neutral, single-focus items. Rewrite questions until they feel fair and straightforward.

Choosing the wrong rating scale can skew your data. Too few points obscure nuances, while too many overload participants. A peer-reviewed Student Satisfaction Survey study confirms that 5- or 7-point Likert scales hit the sweet spot for clarity and comparability.

Balance closed and open-ended prompts to gather both numbers and narratives. Aim for one or two narrative items, such as "What improvements would make this program more effective?", to enrich your quantitative scores. Check our Program Evaluation Survey guide for structuring mix-and-match questions.

Don't ignore technical and design glitches - they kill response rates. Ensure mobile compatibility, clear navigation, and fast load times. Organize questions in a funnel: start broad, then narrow focus. A smooth experience earns trust and keeps participants engaged until the last click.

Pilot every iteration with a small group before the full roll-out. Note where respondents hesitate or drop off, then tweak accordingly. Honest feedback at this stage saves headaches later. By dodging these pitfalls, your Program Satisfaction survey will yield actionable insights that drive real improvement.

Program Content Quality Questions

This set of questions evaluates how well the program content meets learner needs and expectations. High-quality material drives engagement and knowledge retention, guiding continuous improvement in delivery and design. See our Educational Program Survey for more context.

- How clear were the program learning objectives?

  Clear objectives ensure participants know the expected outcomes. This insight helps refine goal statements for better alignment.

- How relevant was the content to your professional or personal goals?

  Assessing relevance measures alignment with learner needs. It highlights areas where material can be tailored or updated.

- Was the difficulty level of the materials appropriate?

  Proper difficulty ensures learners stay challenged without feeling overwhelmed. It informs adjustments to pacing and complexity.

- How well did the program address real-world scenarios?

  Real-world application boosts transfer of learning to practice. Identifying gaps helps incorporate relevant case studies.

- Did the content flow logically between topics?

  Logical sequencing aids comprehension and retention. This feedback guides restructuring of modules for smoother transitions.

- How engaging were the presentations or modules?

  Engagement levels indicate the effectiveness of delivery methods. This feedback drives adoption of interactive or multimedia elements.

- Were the examples and case studies helpful?

  Quality examples reinforce theoretical concepts with practical insight. This helps decide which cases to expand or replace.

- How up-to-date was the information provided?

  Current data and trends maintain program credibility. Identifying outdated content signals needed updates.

- Did the program balance theory and practical application?

  A balanced approach ensures conceptual understanding and skill development. Feedback directs emphasis adjustments between theory and practice.

- Would you recommend the content to a colleague?

  Recommendation likelihood reflects overall content satisfaction. It serves as a proxy for program advocacy and word-of-mouth growth.

Instructor Performance Questions

Instructor effectiveness is crucial for a successful learning experience, from delivery style to expertise. These questions help identify strengths and areas for improvement in teaching methods and communication. Refer to our Program Evaluation Survey for additional evaluation metrics.

- How knowledgeable was the instructor about the subject matter?

  Expertise instills confidence in learners. This feedback pinpoints training or selection needs for instructors.

- How clear was the instructor's communication?

  Clear communication ensures concepts are understood. It guides improvement of verbal and written explanations.

- Did the instructor encourage participant questions?

  Encouraging questions fosters interaction and deeper comprehension. It reflects the instructor's facilitation skills.

- How well did the instructor manage the session time?

  Effective time management keeps sessions focused and on schedule. Insights help balance depth versus breadth of coverage.

- Was the instructor responsive to diverse learning needs?

  Responsiveness ensures all participants stay engaged. It highlights needs for alternative teaching strategies.

- Did the instructor relate concepts to real-life examples?

  Practical examples bridge theory and application. This feedback refines content relevance and engagement.

- How effective were the instructional materials used?

  Supporting materials complement the instructor's delivery. This question identifies strengths or gaps in supplemental resources.

- Did the instructor foster an interactive environment?

  Interaction boosts participation and retention. Feedback informs facilitation techniques for future sessions.

- How organized and well-prepared did the instructor appear?

  Preparation enhances professionalism and flow. This question supports planning and rehearsal improvements.

- Would you attend another session with this instructor?

  Willingness to return indicates instructor effectiveness and satisfaction. It helps identify the most impactful trainers.

Engagement & Interaction Questions

These questions assess the level of engagement and interaction throughout the program, essential for active learning and networking. Understanding participation dynamics offers insight into community building and peer collaboration. Explore our Customer Satisfaction Survey for similar engagement metrics.

- How often did you participate in group discussions?

  Participation frequency measures learner involvement. It drives strategies to increase interaction.

- How effective were the group activities or workshops?

  Activity effectiveness reflects hands-on learning value. Feedback informs design of future collaborative tasks.

- Did you feel comfortable sharing opinions?

  Comfort level indicates psychological safety in the learning environment. It helps improve inclusivity and trust.

- How well did peers contribute to your learning?

  Peer input enriches perspectives and knowledge sharing. This insight shapes group composition strategies.

- Was there a good balance between lectures and discussions?

  Balance ensures varied learning modalities. It informs adjustments to session formats.

- How valuable were peer feedback sessions?

  Peer feedback fosters reflective learning and growth. It highlights the need for structured feedback frameworks.

- Did the program provide enough networking opportunities?

  Networking can extend learning beyond sessions. Feedback identifies desired formats for connection.

- How accessible were instructors during interactive segments?

  Accessibility supports timely guidance and clarification. It informs instructor-to-learner ratios for live sessions.

- Were online forums or chat functions useful?

  Online tools enhance remote collaboration and support. This helps prioritize platform features.

- Would you recommend this program's interaction format to others?

  Recommendation signifies satisfaction with engagement design. It serves as a quality benchmark for collaboration features.

Resource and Material Satisfaction Questions

Resource availability and quality significantly impact the learning journey. These questions measure satisfaction with materials, tools, and support resources provided. For a similar resource focus, see our Software Satisfaction Survey.

- How accessible were the course materials?

  Ease of access ensures learners can study without barriers. Feedback highlights distribution or access issues.

- Was the supporting software or platform easy to use?

  User-friendly platforms reduce technical frustration. Insights guide platform selection and training needs.

- How clear were the instructions for online resources?

  Clarity of instructions prevents confusion and frustration. This directs improvements in documentation quality.

- Did you receive materials in a timely manner?

  Timely delivery supports pacing and preparation. Delays signal supply chain or logistical issues to fix.

- How sufficient were the supplemental readings?

  Supplemental readings reinforce core content. Feedback helps curate or expand reading lists.

- Were multimedia elements (videos, audio) beneficial?

  Multimedia can enhance engagement and retention. This information shapes investment in varied content types.

- Was the technical support responsive when needed?

  Responsive support minimizes downtime and frustration. It highlights staffing or resource gaps in help desks.

- How comprehensive were the provided reference guides?

  Comprehensive guides support independent learning. Feedback drives expansion or revision of reference materials.

- Did you find the downloadable resources useful?

  Downloadables extend learning beyond live sessions. This helps prioritize file formats and content types.

- Would you like additional resource formats (e.g., audio, PDF)?

  Requests for different formats indicate diverse learning preferences. They guide future content production planning.

Overall Program Impact Questions

Assessing overall impact helps determine how the program influences participants' skills and goals. These questions focus on outcomes, satisfaction, and potential areas for program expansion. Check out our Satisfaction Questions Survey for a broader impact perspective.

- To what extent did the program meet your initial expectations?

  Expectation alignment indicates overall satisfaction. It directs program scope adjustments for future cohorts.

- How likely are you to apply learned skills in your work or studies?

  Application likelihood measures practical value. It informs follow-up support and collateral development.

- Did the program enhance your confidence in the subject area?

  Increased confidence reflects mastery and empowerment. This helps quantify learner outcomes beyond content mastery.

- How valuable was the networking component for your career?

  Networking value contributes to long-term professional growth. Feedback shapes future community-building efforts.

- Would you enroll in an advanced version of this program?

  Interest in advanced courses signals program pathway opportunities. It guides development of next-level offerings.

- How satisfied are you with the overall program duration?

  Duration satisfaction balances depth and time investment. It informs optimal scheduling for future sessions.

- Did the program deliver a satisfying return on your time and financial investment?

  ROI perception reflects cost-benefit alignment. This feedback impacts pricing and value proposition.

- How effective was the follow-up or post-program support?

  Ongoing support sustains momentum and application. Insights guide design of alumni engagement strategies.

- Would you recommend this program to peers?

  Recommendation rates are a key advocacy metric. They serve as a strong indicator of overall success.

- Overall, how would you rate your satisfaction with the program?

  Global satisfaction captures the full participant experience. This question serves as a summary health check.